Decision Tree OM: A Comprehensive Guide

Decision trees have been a staple in the field of machine learning for decades. They are simple yet powerful tools that can be used for both classification and regression tasks. In this article, we will delve into the intricacies of decision tree om, exploring its various aspects and applications.

Understanding Decision Trees

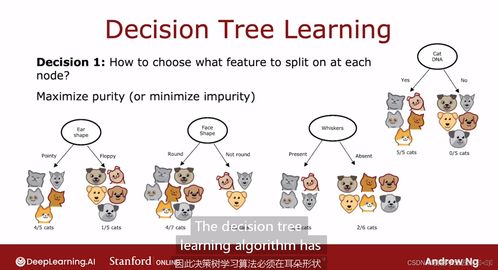

Decision trees are a type of supervised learning algorithm that can be used to make predictions based on a set of input features. They work by creating a tree-like model of decisions and their possible consequences. Each node in the tree represents a feature, and each branch represents a decision rule. The leaves of the tree represent the final decision or prediction.

Decision trees are particularly useful because they are easy to interpret and understand. They can be visualized as a flowchart, making it simple to follow the decision-making process. Additionally, decision trees are capable of handling both categorical and numerical data, making them versatile for a wide range of applications.

Types of Decision Trees

There are several types of decision trees, each with its own strengths and weaknesses. The most common types include:

| Type | Description |

|---|---|

| Classification Tree | Used for predicting categorical outcomes. For example, predicting whether an email is spam or not. |

| Regression Tree | Used for predicting continuous outcomes. For example, predicting house prices based on various features. |

| Random Forest | A collection of decision trees that are trained on different subsets of the data. This ensemble method can improve the accuracy of predictions. |

| Gradient Boosting | Another ensemble method that builds trees sequentially, with each tree trying to correct the errors made by the previous trees. |

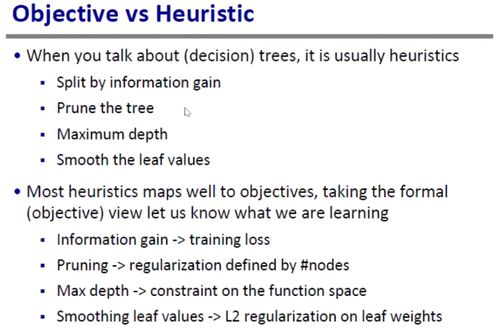

Building a Decision Tree

Building a decision tree involves several steps:

-

Select the best feature to split the data on. This is typically done using a metric such as Gini impurity or information gain.

-

Split the data based on the selected feature. This creates two new nodes in the tree.

-

Repeat the process for each of the new nodes, selecting the best feature to split the data on each time.

-

Stop splitting when a certain condition is met, such as a minimum number of samples or a maximum depth of the tree.

Pruning Decision Trees

Decision trees can become overfit if they are too deep or complex. To prevent this, we can prune the tree by removing branches that do not contribute much to the overall accuracy of the model. There are several pruning techniques, including:

-

Post-pruning: Pruning is done after the tree has been fully grown.

-

Pre-pruning: Pruning is done during the tree-building process, limiting the depth of the tree.

-

Cost-complexity pruning: Pruning is done based on a cost-complexity measure, which balances the trade-off between model complexity and accuracy.

Applications of Decision Trees

Decision trees have a wide range of applications in various fields, including:

-

Medical diagnosis: Predicting the likelihood of a patient having a certain disease based on their symptoms and medical history.

-

Financial risk assessment: Identifying potential risks in credit scoring and fraud detection.

-

Marketing: Predicting customer behavior and segmenting customers for targeted marketing campaigns.

-

Image recognition: Classifying images into different categories, such as identifying objects in an image.

Conclusion

Decision trees are a valuable tool in the machine learning arsenal. Their simplicity and interpretability make them a popular choice for many applications. By understanding the various types, building techniques, and pruning methods, you can harness the power of decision trees to make accurate predictions and solve complex problems.